Data Structures and Algorithms

Data Structure

Data Structures and Algorithms (DSA) form the foundation of problem-solving in computer science. Mastering DSA helps you write efficient and optimized code.

Though concepts remain the same across languages, your choice of language can shape the learning experience.

Let's explore how to get started with DSA in different languages and the advantages each one offers.

Regardless of your chosen language, the key is mastering the core concepts and practicing regularly.

Each language offers unique tools and approaches that can help you gain a deeper understanding of DSA. Start small, build your skills gradually, and apply what you learn through consistent problem-solving.

What is a data structure?

A data structure is a way of storing and organizing data on a computer so that it can be accessed and updated efficiently.

A data structure is a specialized format for organizing, processing, retrieving and storing data. There are several basic and advanced types of data structures, all designed to arrange data to suit a specific purpose. Data structures make it easy for users to access and work with the data they need. Most importantly, data structures frame the organization of information so that machines and humans can better understand it.

In computer science and computer programming, a data structure might be selected or designed to store data for the purpose of using it with various algorithms -- commonly referred to as data structures and algorithms (DSA). In some cases, the algorithm's basic operations are tightly coupled to the data structure's design. Each data structure contains information about the data values; relationships between the data; and, in some cases, functions that can be applied to the data.

For instance, in an object-oriented programming language, the data structure and its associated methods are bound together as part of a class definition. In non-object-oriented languages, there might be functions defined to work with the data structure, but they aren't technically part of the data structure.

Why are data structures important?

Typical base data types, such as integers or floating-point values, that are available in most computer programming languages are generally insufficient to capture the logical intent for data processing and use. Yet applications that ingest, manipulate and produce information must understand how data should be organized to simplify processing. Data structures bring together the data elements in a logical way and facilitate the effective use, persistence and sharing of data. They provide a formal model that describes the way the data elements are organized.

Data structures are the building blocks for more sophisticated applications. They're designed by composing data elements into a logical unit representing an abstract data type that has relevance to the algorithm or application. An example of an abstract data type is a customer name that's composed of the character strings for first name, middle name and last name.

It's not only important to use data structures, but it's also important to choose the proper data structure for each task. Choosing an ill-suited data structure could result in slow runtimes or unresponsive code.

Five factors to consider when picking a data structure include the following:

What kind of information will be stored?

How will that information be used?

Where should data persist or be kept after it's created?

What is the best way to organize the data?

What aspects of memory and storage reservation management should be considered?

How are data structures used?

In general, data structures are used to implement the physical forms of abstract data types. Data structures are a crucial part of designing efficient software. They also play a critical role in algorithm design and how those algorithms are used within computer programs.

Early programming languages, such as Fortran, C and C++, let programmers define their own data structures. Today, many programming languages include an extensive collection of built-in data structures to organize code and information. For example, Python lists and dictionaries and JavaScript arrays and objects are common coding structures used for storing and retrieving information.

Software engineers use algorithms that are tightly coupled with data structures, such as lists, queues and mappings from one set of values to another. This approach can be used in a variety of applications, including managing collections of records in a relational database and creating an index of those records using a data structure called a binary tree.

Examples of how data structures are used include the following:

Storing data. Data structures are used for efficient data persistence, such as specifying the collection of attributes and corresponding structures used to store records in a database management system.

Managing resources and services. Core operating system resources and services are enabled using data structures such as linked lists for memory allocation, file directory management and file structure trees, as well as process scheduling queues.

Data exchange. Data structures define the organization of information shared between applications, such as TCP/IP packets.

Ordering and sorting. Data structures such as binary search trees -- also known as an ordered or sorted binary tree -- provide efficient methods of sorting objects, such as character strings used as tags. With data structures such as priority queues, programmers can manage items organized according to a specific priority.

Indexing. Even more sophisticated data structures such as B-trees are used to index objects, such as those stored in a database.

Searching. Indexes created using binary search trees, B-trees or hash tables speed up finding a specific sought-after item.

Scalability. Big data applications use data structures for allocating and managing data storage across distributed storage locations, ensuring scalability and performance. Certain big data programming environments -- such as Apache Spark -- provide data structures that mirror the underlying structure of database records to simplify querying.

Characteristics of data structures

Data structures are often classified by their characteristics:

Linear or non-linear. This describes whether the data items are arranged in sequential order, such as with an array, or in a non-sequential arrangement, such as with a graph.

Homogeneous or heterogeneous. This describes whether all data items in a particular repository are of the same type, as with the elements of an array, or of various types, as with an abstract data type defined as a structure in C or a class specification in Java.

Static or dynamic. This describes how memory for the data structure is allocated. Static data structures have fixed sizes, structures and memory locations at compile time. Dynamic data structures have sizes, structures and memory locations that can shrink or expand at run time, depending on the use.

Data types

If data structures are the building blocks of algorithms and computer programs, the primitive -- or base -- data types are the building blocks of data structures. The typical base data types include the following:

Boolean stores logical values that are either true or false.

Integer stores a range of mathematical integers, or counting numbers. Integers of different sizes hold different ranges of values. A signed 8-bit integer holds values from -128 to 127, and an unsigned 32-bit integer holds values from 0 to 4,294,967,295.
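The ranges quoted above can be checked with the C++ standard library. A minimal sketch, assuming a platform that provides the fixed-width types `std::int8_t` and `std::uint32_t` (almost all do); the function name `printIntegerRanges` is hypothetical, chosen for this illustration.

```cpp
#include <cstdint>
#include <iostream>
#include <limits>

// Prints the range of a signed 8-bit and an unsigned 32-bit integer.
// Values are cast to long long so that int8_t prints as a number, not a character.
void printIntegerRanges() {
    std::cout << "int8_t:   "
              << static_cast<long long>(std::numeric_limits<std::int8_t>::min()) << " to "
              << static_cast<long long>(std::numeric_limits<std::int8_t>::max()) << '\n';
    std::cout << "uint32_t: "
              << static_cast<long long>(std::numeric_limits<std::uint32_t>::min()) << " to "
              << static_cast<long long>(std::numeric_limits<std::uint32_t>::max()) << '\n';
}
```

Calling `printIntegerRanges()` prints -128 to 127 for `int8_t` and 0 to 4294967295 for `uint32_t`.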

Floating-point numbers store an approximation of real numbers as a sign, a significand and an exponent, similar to scientific notation.

Fixed-point numbers are used in some programming languages to hold real values with a fixed number of digits to the left and the right of the decimal point.

Character uses symbols from a defined mapping of integer values to symbols.

Pointers are reference values that point to other values.

String is an array of characters followed by a stop code -- usually a zero (null) character -- or is managed using a length field that is an integer value.
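Both string layouts can be seen in C++: a C-style string ends with a zero (null) character, while `std::string` keeps its length separately. The helper names `cStringLength` and `stdStringLength` are hypothetical; the first mimics what `std::strlen` does.

```cpp
#include <cstddef>
#include <string>

// Counts characters up to the terminating zero ('\0'), as a C-style string requires.
std::size_t cStringLength(const char* s) {
    std::size_t n = 0;
    while (s[n] != '\0') {
        ++n;
    }
    return n;
}

// A std::string stores its length separately, so size() does not scan for a terminator.
std::size_t stdStringLength(const std::string& s) {
    return s.size();
}
```

One consequence of the length field: a `std::string` built from `"ab\0cd"` with an explicit length of 5 reports a size of 5, while scanning its characters for the terminator stops after 2.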

Types of data structures

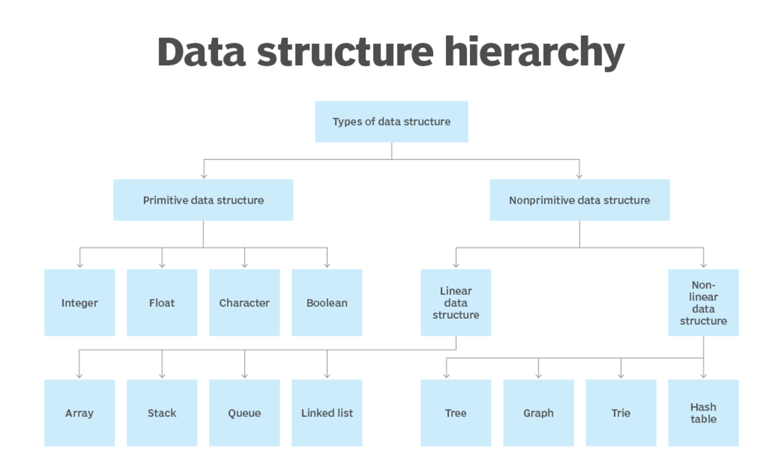

Data structures are divided into two broad categories:

Linear data structure

Non-linear data structure

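Among the common data structures described below, arrays, stacks, queues and linked lists are linear, while trees, heaps, graphs and tries are non-linear.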

The data structure type used in a particular situation is determined by the type of operations that will be required or the kinds of algorithms that will be applied. The various common data structures include the following:

Array. An array stores a collection of items at adjoining memory locations. Items that are the same type are stored together so the position of each element can be calculated or retrieved easily by an index. Arrays can be fixed or flexible in length.
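Index-based access and the fixed-versus-flexible distinction can be sketched with `std::array` (fixed length) and `std::vector` (flexible length). The function names below are hypothetical, and the address arithmetic in the comments is the usual model of contiguous storage.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Fixed length: the size (5) is part of the type and cannot change.
std::array<int, 5> makeFixed() {
    std::array<int, 5> a = {10, 20, 30, 40, 50};
    return a;
}

// Flexible length: a vector can grow as items are appended.
std::vector<int> makeFlexible() {
    std::vector<int> v = {10, 20, 30};
    v.push_back(40);  // the vector now holds 4 items
    return v;
}

// Elements sit in adjoining memory, so element i lives at
// base address + i * sizeof(int); reaching it by index needs no search.
bool isContiguous(const std::vector<int>& v) {
    for (std::size_t i = 0; i < v.size(); ++i) {
        if (&v[i] != v.data() + i) {
            return false;
        }
    }
    return true;
}
```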

Stack. A stack stores a collection of items in the linear order in which operations are applied, following last in, first out (LIFO) order: the most recently added item is the first one removed.

Queue. A queue also stores a collection of items in linear order. However, it follows first in, first out (FIFO) order: the earliest added item is the first one removed.
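The difference between LIFO and FIFO order shows up clearly with the standard `std::stack` and `std::queue` adapters. This is a minimal sketch; the function names `drainStack` and `drainQueue` are hypothetical.

```cpp
#include <initializer_list>
#include <queue>
#include <stack>
#include <vector>

// Pushes 1, 2, 3 onto a stack and pops them all: last in, first out.
std::vector<int> drainStack() {
    std::stack<int> s;
    for (int x : {1, 2, 3}) s.push(x);
    std::vector<int> out;
    while (!s.empty()) {
        out.push_back(s.top());  // the most recently pushed item
        s.pop();
    }
    return out;  // {3, 2, 1}
}

// Pushes 1, 2, 3 into a queue and pops them all: first in, first out.
std::vector<int> drainQueue() {
    std::queue<int> q;
    for (int x : {1, 2, 3}) q.push(x);
    std::vector<int> out;
    while (!q.empty()) {
        out.push_back(q.front());  // the earliest pushed item
        q.pop();
    }
    return out;  // {1, 2, 3}
}
```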

Linked list. A linked list stores a collection of items in a linear order. Each element, or node, in a linked list contains a data item, as well as a reference, or link, to the next item in the list.
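A singly linked list node holds a data item and a link to the next node. The sketch below, with the hypothetical names `Node`, `pushFront` and `toVector`, uses `std::unique_ptr` for the links so that nodes are freed automatically.

```cpp
#include <memory>
#include <utility>
#include <vector>

// Each node stores a data item and owns the link to the next node.
struct Node {
    int value;
    std::unique_ptr<Node> next;
};

// Inserts a new node at the head of the list in constant time.
void pushFront(std::unique_ptr<Node>& head, int value) {
    auto node = std::make_unique<Node>();
    node->value = value;
    node->next = std::move(head);
    head = std::move(node);
}

// Walks the list from head to tail by following each link.
std::vector<int> toVector(const std::unique_ptr<Node>& head) {
    std::vector<int> out;
    for (const Node* cur = head.get(); cur != nullptr; cur = cur->next.get()) {
        out.push_back(cur->value);
    }
    return out;
}
```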

Tree. A tree stores a collection of items in an abstract, hierarchical way. Each node is associated with a key value, with parent nodes linked to child nodes, or subnodes. There's one root node that is the ancestor of all the nodes in the tree. Moving down through such a tree structure, from the root node, to access all subsequent nodes is called traversal and can be done in a variety of orders, some of which can affect the performance of the tree DSA.
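Traversal order is easiest to see on a small binary tree. The sketch below, with the hypothetical names `TreeNode`, `traverse` and `makeSampleTree`, visits the same tree in preorder (node before its children), inorder (left subtree, node, right subtree) and postorder (children before the node).

```cpp
#include <memory>
#include <string>

// A binary tree node: a key plus links to up to two children.
struct TreeNode {
    char key;
    std::unique_ptr<TreeNode> left;
    std::unique_ptr<TreeNode> right;
};

enum class Order { Pre, In, Post };

// Recursively visits every node below `node`, appending keys to `out` in the chosen order.
void traverse(const TreeNode* node, Order order, std::string& out) {
    if (node == nullptr) return;
    if (order == Order::Pre) out += node->key;
    traverse(node->left.get(), order, out);
    if (order == Order::In) out += node->key;
    traverse(node->right.get(), order, out);
    if (order == Order::Post) out += node->key;
}

// Builds a three-node tree: root B with left child A and right child C.
std::unique_ptr<TreeNode> makeSampleTree() {
    auto root = std::make_unique<TreeNode>();
    root->key = 'B';
    root->left = std::make_unique<TreeNode>();
    root->left->key = 'A';
    root->right = std::make_unique<TreeNode>();
    root->right->key = 'C';
    return root;
}
```

On this tree, preorder yields "BAC", inorder yields "ABC" and postorder yields "ACB".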

Heap. A heap is a tree-based structure in which each parent node's key is ordered relative to its children's keys. In a max-heap, each parent's key is greater than or equal to the keys of its children; in a min-heap, it is less than or equal to them.
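A heap is usually stored in an array, with the parent of index i at index (i - 1) / 2. The sketch below checks that property directly and then uses the standard `std::priority_queue`, which is a max-heap by default and a min-heap when given `std::greater`. The names `isMaxHeap` and `popAll` are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <queue>
#include <vector>

// Checks the max-heap property on an array-stored heap: for every index i > 0,
// the parent at index (i - 1) / 2 must be greater than or equal to the child.
bool isMaxHeap(const std::vector<int>& v) {
    for (std::size_t i = 1; i < v.size(); ++i) {
        if (v[(i - 1) / 2] < v[i]) return false;
    }
    return true;
}

// Repeatedly removes the top of a heap and records the keys in removal order.
template <typename Heap>
std::vector<int> popAll(Heap heap) {
    std::vector<int> out;
    while (!heap.empty()) {
        out.push_back(heap.top());
        heap.pop();
    }
    return out;
}
```

Popping everything from a max-heap yields keys in descending order and from a min-heap in ascending order, which is why heaps back priority queues.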

Graph. A graph stores a collection of items in a nonlinear fashion. Graphs are made up of a finite set of nodes, also known as vertices, and lines that connect them, also known as edges. These are useful for representing real-world systems such as computer networks.
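A common way to store a graph is an adjacency list: for each node, the list of nodes it shares an edge with. As a sketch on a hypothetical five-node network, breadth-first search (BFS) then answers a connectivity question such as the fewest hops from one node to every other. The names `Graph`, `addEdge` and `hopCounts` are hypothetical.

```cpp
#include <queue>
#include <vector>

// Adjacency list: graph[u] lists the neighbors of node u.
using Graph = std::vector<std::vector<int>>;

// Adds an undirected edge between nodes u and v.
void addEdge(Graph& g, int u, int v) {
    g[u].push_back(v);
    g[v].push_back(u);
}

// Breadth-first search from `source`; returns the number of edges on a
// shortest path to every node, or -1 for nodes that cannot be reached.
std::vector<int> hopCounts(const Graph& g, int source) {
    std::vector<int> dist(g.size(), -1);
    std::queue<int> frontier;
    dist[source] = 0;
    frontier.push(source);
    while (!frontier.empty()) {
        int u = frontier.front();
        frontier.pop();
        for (int v : g[u]) {
            if (dist[v] == -1) {  // first time v is reached
                dist[v] = dist[u] + 1;
                frontier.push(v);
            }
        }
    }
    return dist;
}
```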

Trie. A trie, also known as a keyword tree or prefix tree, is a tree-shaped data structure that stores strings: each edge corresponds to a character, so strings that share a prefix share the same path from the root.
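A minimal trie sketch, assuming keys made only of the lowercase letters a to z (an assumption for brevity; other designs use a map per node). Each node has one child slot per letter, and a flag marks where a stored string ends. The names `Trie`, `insert`, `contains` and `hasPrefix` are hypothetical.

```cpp
#include <array>
#include <memory>
#include <string>

class Trie {
    struct Node {
        std::array<std::unique_ptr<Node>, 26> child;  // one slot per letter 'a'..'z'
        bool isEnd = false;                            // true if a stored string ends here
    };
    Node root;

    // Follows the path spelled by `s`; returns nullptr if it leaves the trie.
    // Assumes `s` contains only lowercase letters.
    const Node* walk(const std::string& s) const {
        const Node* cur = &root;
        for (char c : s) {
            cur = cur->child[c - 'a'].get();
            if (cur == nullptr) return nullptr;
        }
        return cur;
    }

public:
    // Adds `word`, creating nodes only for the part of its path not already present.
    void insert(const std::string& word) {
        Node* cur = &root;
        for (char c : word) {
            auto& next = cur->child[c - 'a'];
            if (!next) next = std::make_unique<Node>();
            cur = next.get();
        }
        cur->isEnd = true;
    }

    // True only if `word` itself was inserted.
    bool contains(const std::string& word) const {
        const Node* n = walk(word);
        return n != nullptr && n->isEnd;
    }

    // True if some inserted string starts with `prefix`.
    bool hasPrefix(const std::string& prefix) const {
        return walk(prefix) != nullptr;
    }
};
```

After inserting "car" and "cart", both share the nodes for c, a and r, and a lookup costs one step per character regardless of how many strings are stored.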

Hash table. A hash table -- also known as a hash map -- stores a collection of items in an associative array that maps keys to values. A hash table uses a hash function to convert a key into an index into an array of buckets that contain the desired data item. Hashing brings a complication of its own: a collision, where two keys hash to the same value, so a new key maps to an already-occupied location in the table. Chaining resolves this by keeping a linked list at each location to store all the elements hashed there.
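Chaining can be sketched in a few lines. This toy `ChainedHashTable` (a hypothetical name) maps string keys to int values, uses `std::hash` to turn a key into a bucket index, and keeps colliding entries in a per-bucket linked list (`std::list`). Real C++ code would normally use `std::unordered_map`; the fixed bucket count is an assumption to keep the sketch short.

```cpp
#include <cstddef>
#include <functional>
#include <list>
#include <string>
#include <utility>
#include <vector>

class ChainedHashTable {
    // Each bucket is a linked list of (key, value) pairs that hashed to the same index.
    std::vector<std::list<std::pair<std::string, int>>> buckets;

    std::size_t indexFor(const std::string& key) const {
        return std::hash<std::string>{}(key) % buckets.size();
    }

public:
    // bucketCount must be at least 1.
    explicit ChainedHashTable(std::size_t bucketCount = 8) : buckets(bucketCount) {}

    // Inserts or updates `key`; a collision simply appends to that bucket's list.
    void put(const std::string& key, int value) {
        auto& chain = buckets[indexFor(key)];
        for (auto& entry : chain) {
            if (entry.first == key) {
                entry.second = value;
                return;
            }
        }
        chain.emplace_back(key, value);
    }

    // Searches only the bucket the key hashes to; returns nullptr if absent.
    const int* get(const std::string& key) const {
        const auto& chain = buckets[indexFor(key)];
        for (const auto& entry : chain) {
            if (entry.first == key) return &entry.second;
        }
        return nullptr;
    }
};
```

Constructing the table with a single bucket forces every key to collide, which is a quick way to exercise the chaining path.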

The non-linear structures among these, such as trees, graphs and tries, are considered complex data structures, as they can store large amounts of interconnected data.

How to choose a data structure

When selecting a data structure for a program or application, developers should consider their answers to the following questions:

What functions and operations does the program need?

What level of computational performance is tolerable? For speed, a data structure whose operations execute in time linear to the number of items managed -- using Big O notation: O(n) -- will generally be faster on large inputs than a data structure whose operations execute in time proportional to the square of the number of items managed -- O(n^2).
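To make the O(n) versus O(n^2) contrast concrete, here is a hypothetical task, checking a list for duplicate values, solved two ways. The nested-loop version compares every pair, roughly n^2 / 2 comparisons; the second makes one pass and remembers what it has seen in a hash set (`std::unordered_set`), which takes expected O(n) time. Both function names are hypothetical.

```cpp
#include <cstddef>
#include <unordered_set>
#include <vector>

// O(n^2): compares every pair of elements.
bool hasDuplicateQuadratic(const std::vector<int>& v) {
    for (std::size_t i = 0; i < v.size(); ++i) {
        for (std::size_t j = i + 1; j < v.size(); ++j) {
            if (v[i] == v[j]) return true;
        }
    }
    return false;
}

// Expected O(n): one pass, with constant-time average lookups in a hash set.
bool hasDuplicateLinear(const std::vector<int>& v) {
    std::unordered_set<int> seen;
    for (int x : v) {
        if (!seen.insert(x).second) return true;  // insert fails if x was already seen
    }
    return false;
}
```

For a million items, the first version may make up to about 5 * 10^11 comparisons, while the second performs about a million set operations.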

How long does it take an algorithm to process data within a structure (i.e., how long does it take a program to process a given input)? Time complexity is often a factor when selecting structures appropriate for machine learning applications.

Is the organization of the data structure and its functional interface easy to use?

Is the deletion of data stored in a data structure straightforward? There are times when this, too, can be complex. For example, items in a linked list can be deleted by key (removing the node that holds a given value) or by position (removing the node at a given index).
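Deletion by key and deletion by position can be sketched on a singly linked list. The names `Node`, `removeKey`, `removeAt`, `fromVector` and `toVector` are hypothetical; both removal functions return `false` when nothing was removed. Each walks a pointer to the link that points at the current node, so removing the head needs no special case.

```cpp
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

struct Node {
    int value;
    std::unique_ptr<Node> next;
};

// Unlinks the node owned by *link; the removed node is freed when `removed` goes out of scope.
void unlink(std::unique_ptr<Node>* link) {
    std::unique_ptr<Node> removed = std::move(*link);
    *link = std::move(removed->next);
}

// Deletes the first node whose value equals `key`.
bool removeKey(std::unique_ptr<Node>& head, int key) {
    for (std::unique_ptr<Node>* link = &head; *link; link = &(*link)->next) {
        if ((*link)->value == key) {
            unlink(link);
            return true;
        }
    }
    return false;
}

// Deletes the node at zero-based `position`.
bool removeAt(std::unique_ptr<Node>& head, std::size_t position) {
    std::unique_ptr<Node>* link = &head;
    for (std::size_t i = 0; i < position && *link; ++i) {
        link = &(*link)->next;
    }
    if (!*link) return false;  // position is past the end of the list
    unlink(link);
    return true;
}

// Builds a list holding `values` in order.
std::unique_ptr<Node> fromVector(const std::vector<int>& values) {
    std::unique_ptr<Node> head;
    for (auto it = values.rbegin(); it != values.rend(); ++it) {
        auto node = std::make_unique<Node>();
        node->value = *it;
        node->next = std::move(head);
        head = std::move(node);
    }
    return head;
}

std::vector<int> toVector(const std::unique_ptr<Node>& head) {
    std::vector<int> out;
    for (const Node* cur = head.get(); cur != nullptr; cur = cur->next.get()) {
        out.push_back(cur->value);
    }
    return out;
}
```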

How easy is it to visualize the data structure? This can be an important criterion in selection, as the graphing of data is generally useful in understanding real-life problem-solving.

Some real-world examples include the following:

Linked lists are best if a program is managing a collection of items that don't need to be ordered, constant time is required for adding or removing an item at a known position, such as the head of the list, and increased search time is OK.

Stacks are best if the program is managing a collection that needs to support a LIFO order.

Queues should be used if the program is managing a collection that needs to support a FIFO order.

Binary trees are good for managing a collection of items with a parent-child relationship, such as a family tree.

Binary search trees are appropriate for managing a sorted collection where the goal is to optimize the time it takes to find specific items in the collection.

Graphs work best if the application will analyze connectivity and relationships among a collection of individuals in a social media network.

Why Learn Data Structures and Algorithms?

This article is for those who have just started learning algorithms and wonder how much doing so will boost their career and programming skills. It is also for those who wonder why big companies like Google, Facebook, and Amazon hire programmers who are exceptionally good at optimizing algorithms.

What are Algorithms?

Informally, an algorithm is a sequence of steps that solves a problem. It is, in essence, a solution.

For example, an algorithm to solve the problem of factorials might look something like this:

Problem: Find the factorial of n

Initialize fact = 1
For every value v in range 1 to n:
    Multiply the fact by v
fact contains the factorial of n

Here, the algorithm is written in English. If it were written in a programming language, we would call it code instead. Here is code for finding the factorial of a number in C++:

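unsigned long long factorial(int n) {
    // unsigned long long holds results up to 20!; a plain int overflows after 12!
    unsigned long long fact = 1;
    for (int v = 1; v <= n; v++) {
        fact = fact * v;
    }
    return fact;
}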

Programming is all about data structures and algorithms. Data structures are used to hold data while algorithms are used to solve the problem using that data.

The study of data structures and algorithms (DSA) goes through solutions to standard problems in detail and gives you insight into how efficient each of them is. It also teaches you the science of evaluating the efficiency of an algorithm, which enables you to choose the best of several options.

Use of Data Structures and Algorithms to Make Your Code Scalable

Time is precious.

Suppose Alice and Bob are trying to solve the simple problem of finding the sum of the first 10^11 natural numbers. While Bob was writing the algorithm, Alice implemented it, proving how simple it is.

Algorithm (by Bob)

Initialize sum = 0
For every natural number n in range 1 to 10^11 (inclusive):
    Add n to sum
sum is your answer

Code (by Alice)

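unsigned long long findSum() {
    unsigned long long sum = 0;
    // An int cannot hold 10^11, so the loop counter needs a 64-bit type.
    for (unsigned long long v = 1; v <= 100000000000ULL; v++) {
        sum += v;
    }
    // The exact sum (about 5 * 10^21) exceeds 64 bits, so this result wraps around;
    // the point of the example is the running time.
    return sum;
}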

Alice and Bob are pleased with themselves for building something of their own in almost no time. Let's sneak into their workspace and listen to their conversation.

Alice: Let's run this code and find out the sum.

Bob: I ran this code a few minutes ago, but it's still not showing the output. What's wrong with it?

Oops, something went wrong! A computer is a deterministic machine, so going back and running the code again won't help. Let's analyze what's wrong with this simple code.

Two of the most valuable resources for a computer program are time and memory.

The time taken by the computer to run code is:

Time to run code = number of instructions * time to execute each instruction

The number of instructions depends on the code you wrote, and the time taken to execute each instruction depends on your machine and compiler.

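In this case, the total number of instructions executed (let's call it x) is x = 1 + (10^11 + 1) + (10^11) + 1: one initialization, 10^11 + 1 loop comparisons, 10^11 additions and one return (ignoring the loop increments for simplicity). That is, x = 2 * 10^11 + 3.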

Let us assume that a computer can execute y = 10^8 instructions in one second (this varies with the machine configuration). The time taken to run the above code is then:

Time to run y instructions = 1 second
Time to run 1 instruction = 1 / y seconds
Time to run x instructions = x * (1 / y) seconds = x / y seconds

Hence,

Time to run the code = x / y = (2 * 10^11 + 3) / 10^8 seconds, which is about 2,000 seconds (more than 33 minutes)

Is it possible to optimize the algorithm so that Alice and Bob do not have to wait for 33 minutes every time they run this code?

I am sure that you have already guessed the right method. The sum of the first N natural numbers is given by the formula:

Sum = N * (N + 1) / 2

Converting it into code will look something like this:

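unsigned long long sum(unsigned long long N) {
    // Exact while N * (N + 1) fits in 64 bits (N up to about 4.3 * 10^9);
    // a value such as 10^11 needs a wider or arbitrary-precision integer type.
    return N * (N + 1) / 2;
}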

This code executes in just one instruction and gets the task done no matter what the value of N is. Even if N were greater than the total number of atoms in the universe, it would find the result in no time, given an integer type wide enough to hold the result.

The time taken to solve the problem, in this case, is 1/y seconds (which is 10 nanoseconds). By the way, the fusion reaction of a hydrogen bomb takes 40-50 ns, which means your program will complete successfully even if someone throws a hydrogen bomb on your computer at the moment you run your code. :)

Note: Computers take a few instructions (not 1) to compute multiplication and division. I have said 1 just for the sake of simplicity.
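The two approaches can be compared side by side. A minimal sketch: `sumLoop` mirrors Alice's loop and `sumFormula` uses N * (N + 1) / 2 (both names are hypothetical). Running them for small N shows that they agree, while only the formula avoids N iterations. As a design choice, `sumFormula` halves whichever factor is even before multiplying, so the intermediate product never exceeds the result.

```cpp
// O(N): adds the numbers one at a time, like the loop above.
unsigned long long sumLoop(unsigned long long n) {
    unsigned long long sum = 0;
    for (unsigned long long v = 1; v <= n; ++v) {
        sum += v;
    }
    return sum;
}

// O(1): a fixed number of operations, whatever the value of n.
// Exact as long as the result fits in 64 bits (n up to about 6 * 10^9).
unsigned long long sumFormula(unsigned long long n) {
    return (n % 2 == 0) ? (n / 2) * (n + 1) : n * ((n + 1) / 2);
}
```

For example, both functions return 500500 for n = 1000.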

More on Scalability

Scalability is scale plus ability: the capacity of an algorithm or system to handle larger problems.

Consider the problem of setting up a classroom for 50 students. One of the simplest solutions is to book a room and get a blackboard and a few pieces of chalk, and the problem is solved.

But what if the size of the problem increases? What if the number of students increased to 200?

The solution still holds but it needs more resources. In this case, you will probably need a much larger room (probably a theater), a projector screen and a digital pen.

What if the number of students increased to 1000?

The solution fails or uses a lot of resources when the size of the problem increases. This means your solution isn't scalable.

What is a scalable solution then?

Consider a site like Khan Academy: millions of students can watch videos and read answers at the same time without requiring proportionally more resources. Such a solution can handle much larger problems even when resources are limited.

Our first solution for finding the sum of the first N natural numbers wasn't scalable, because its running time grew linearly with the size of the problem. Such algorithms are also known as linearly scalable algorithms.

Our second solution was very scalable and didn't require the use of any more time to solve a problem of larger size. These are known as constant-time algorithms.

Memory is expensive

Memory is not always available in abundance. When dealing with code or systems that store or produce a lot of data, it is critical for your algorithm to save memory wherever possible. For example, when storing data about people, you can save memory by storing only their date of birth, not their age. You can always calculate their age on the fly from their date of birth and the current date.
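Computing age on the fly takes only a few comparisons. A minimal sketch with a hypothetical `Date` struct (year, month, day); a production system would more likely rely on a date library.

```cpp
// Hypothetical plain date type used only for this illustration.
struct Date {
    int year;
    int month;  // 1 to 12
    int day;    // 1 to 31
};

// Age in whole years on `today` for someone born on `birth`.
int ageOn(const Date& birth, const Date& today) {
    int age = today.year - birth.year;
    // Subtract one if this year's birthday has not happened yet.
    bool birthdayPassed = today.month > birth.month ||
                          (today.month == birth.month && today.day >= birth.day);
    if (!birthdayPassed) --age;
    return age;
}
```

For someone born on 20 May 2000, this gives 23 on 19 May 2024 and 24 from 20 May 2024 onward.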

Examples of an Algorithm's Efficiency

Here are some examples of what learning algorithms and data structures enable you to do:

Example 1: Age Group Problem

Problems like finding the people in a certain age group can easily be solved with a slightly modified version of the binary search algorithm (assuming that the data is sorted by age).

The naive algorithm, which goes through the people one by one and checks whether each falls in the given age group, is linearly scalable. Binary search, by contrast, is logarithmically scalable: if the size of the problem is squared, the time taken to solve it only doubles.

Suppose it takes 1 second to find all the people of a certain age in a group of 1000. Then for a group of 1 million people (1000 squared):

the binary search algorithm will take only 2 seconds to solve the problem

the naive algorithm might take 1000 seconds (about 17 minutes), because its running time grows in proportion to the number of people
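A sketch of the age-group query, assuming the ages are already sorted in ascending order: two binary searches, via `std::lower_bound` and `std::upper_bound`, find where the range [minAge, maxAge] begins and ends, so locating the group takes about 2 log2(n) comparisons. The function names `countInAgeGroup` and `countInAgeGroupNaive` are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Counts people whose age lies in [minAge, maxAge]; `sortedAges` must be ascending.
std::size_t countInAgeGroup(const std::vector<int>& sortedAges, int minAge, int maxAge) {
    // First position whose age is >= minAge.
    auto first = std::lower_bound(sortedAges.begin(), sortedAges.end(), minAge);
    // First position whose age is > maxAge.
    auto last = std::upper_bound(sortedAges.begin(), sortedAges.end(), maxAge);
    return first < last ? static_cast<std::size_t>(last - first) : 0;
}

// Naive version for comparison: checks every person, so it is linearly scalable.
std::size_t countInAgeGroupNaive(const std::vector<int>& ages, int minAge, int maxAge) {
    return static_cast<std::size_t>(std::count_if(ages.begin(), ages.end(),
        [&](int a) { return a >= minAge && a <= maxAge; }));
}
```

The people in the group are exactly the elements between the two positions that the binary searches return.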

A similar binary search can also be used to find the square root of a number.
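As a sketch of that idea, the integer square root of n (the largest r with r * r <= n) can be found by binary search over candidate values of r. The function name `integerSqrt` is hypothetical; it uses 64-bit unsigned integers and compares mid <= n / mid instead of mid * mid <= n so the test itself cannot overflow.

```cpp
#include <cstdint>

// Returns the largest r such that r * r <= n, by binary search on r.
std::uint64_t integerSqrt(std::uint64_t n) {
    // The answer never exceeds 2^32 - 1, since (2^32)^2 is larger than any 64-bit value.
    std::uint64_t lo = 0;
    std::uint64_t hi = n < 4294967295ULL ? n : 4294967295ULL;  // answer lies in [lo, hi]
    while (lo < hi) {
        // Upper middle, so the loop makes progress when lo = mid; mid is always >= 1.
        std::uint64_t mid = lo + (hi - lo + 1) / 2;
        if (mid <= n / mid) {
            lo = mid;        // mid * mid <= n: the answer is at least mid
        } else {
            hi = mid - 1;    // mid * mid > n: the answer is below mid
        }
    }
    return lo;
}
```

Each step halves the range of candidates, so even for the largest 64-bit input the loop runs only about 32 times.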

Example 2: Rubik's Cube Problem

Imagine you are writing a program to find the solution of a Rubik's cube.

This cute-looking puzzle has an annoying 43,252,003,274,489,856,000 positions, and these are just positions! Imagine the number of paths one can take that lead to wrong positions.

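Fortunately, the problem can be represented with the graph data structure: each position is a node, and each move connects two positions. A graph algorithm known as Dijkstra's algorithm finds a shortest path between two nodes, which here means reaching the solved position in the minimum number of moves. Its running time grows only slightly faster than linearly with the size of the graph, O((V + E) log V) for V nodes and E edges when implemented with a binary heap, although a graph this large is still too big to explore exhaustively, so practical solvers also rely on heuristics.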
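The Rubik's cube graph is far too large to write down, but Dijkstra's algorithm itself fits in a few lines. A minimal sketch (C++17) on a small hypothetical weighted graph, using `std::priority_queue` as a min-heap of tentative distances; for the cube, where every move costs 1, the same search reduces to breadth-first search. The names `WeightedGraph` and `dijkstra` are hypothetical.

```cpp
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

// graph[u] holds (neighbor, edge weight) pairs; weights must be non-negative.
using WeightedGraph = std::vector<std::vector<std::pair<int, long long>>>;

// Returns the shortest distance from `source` to every node
// (std::numeric_limits<long long>::max() if unreachable).
std::vector<long long> dijkstra(const WeightedGraph& graph, int source) {
    const long long INF = std::numeric_limits<long long>::max();
    std::vector<long long> dist(graph.size(), INF);
    // Min-heap of (distance, node): the closest unsettled node is on top.
    std::priority_queue<std::pair<long long, int>,
                        std::vector<std::pair<long long, int>>,
                        std::greater<std::pair<long long, int>>> pq;
    dist[source] = 0;
    pq.push({0, source});
    while (!pq.empty()) {
        auto [d, u] = pq.top();
        pq.pop();
        if (d > dist[u]) continue;  // stale entry: u was already settled with a shorter distance
        for (auto [v, w] : graph[u]) {
            if (dist[u] + w < dist[v]) {
                dist[v] = dist[u] + w;
                pq.push({dist[v], v});
            }
        }
    }
    return dist;
}
```

Skipping stale heap entries, rather than updating keys in place, keeps the sketch short at the cost of a few extra heap operations.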

Example 3: DNA Problem

DNA is a molecule that carries genetic information. It is made up of smaller units (nucleotides), represented by the letters A, C, G and T.

Imagine yourself working in the field of bioinformatics. You are assigned the task of finding the occurrences of a particular pattern in a DNA strand.

This is a well-known problem in computer science, and the simplest algorithm takes time proportional to

(number of characters in DNA strand) * (number of characters in pattern)

A typical DNA strand has millions of such units. Eh! Worry not. The Knuth-Morris-Pratt (KMP) algorithm can get this done in time proportional to

(number of characters in DNA strand) + (number of characters in pattern)

Replacing the * operator with + makes a big difference.

If the pattern has 100 characters, the KMP algorithm is roughly 100 times faster. If the pattern has 1000 characters, it is almost 1000 times faster. That is, if it took you 1 second to find the occurrences of a pattern before, it will now take about 1 ms. We can also put this another way: instead of matching 1 strand, you can match 1000 strands of similar length in the same time.
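A compact sketch of the Knuth-Morris-Pratt idea: first compute, for each prefix of the pattern, the length of its longest proper prefix that is also a suffix (the failure table); then scan the text once, using the table to fall back within the pattern instead of re-reading text characters. The function names `failureTable` and `kmpSearch` are hypothetical; `kmpSearch` returns the starting index of every occurrence.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// fail[i] = length of the longest proper prefix of pattern[0..i] that is also its suffix.
std::vector<std::size_t> failureTable(const std::string& pattern) {
    std::vector<std::size_t> fail(pattern.size(), 0);
    std::size_t k = 0;  // length of the current matching prefix
    for (std::size_t i = 1; i < pattern.size(); ++i) {
        while (k > 0 && pattern[i] != pattern[k]) k = fail[k - 1];
        if (pattern[i] == pattern[k]) ++k;
        fail[i] = k;
    }
    return fail;
}

// Returns the start index of every occurrence of `pattern` in `text`,
// in time proportional to text.size() + pattern.size().
std::vector<std::size_t> kmpSearch(const std::string& text, const std::string& pattern) {
    std::vector<std::size_t> matches;
    if (pattern.empty()) return matches;
    const auto fail = failureTable(pattern);
    std::size_t k = 0;  // number of pattern characters currently matched
    for (std::size_t i = 0; i < text.size(); ++i) {
        while (k > 0 && text[i] != pattern[k]) k = fail[k - 1];  // fall back without moving i
        if (text[i] == pattern[k]) ++k;
        if (k == pattern.size()) {
            matches.push_back(i + 1 - k);
            k = fail[k - 1];  // keep going to find overlapping matches
        }
    }
    return matches;
}
```

For example, searching "ACGTACGTAC" for "ACG" finds matches starting at indices 0 and 4.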

And there are countless such stories...

Final Words

Generally, software development involves learning new technologies on a daily basis, and you learn most of them while using them in your projects. However, that is not the case with algorithms.

If you don't know algorithms well, you won't be able to identify if you can optimize the code you are writing right now. You are expected to know them in advance and apply them wherever possible and critical.

We specifically talked about the scalability of algorithms. A software system consists of many such algorithms. Optimizing any one of them leads to a better system.

However, it's important to note that this is not the only way to make a system scalable. For example, a technique known as distributed computing allows independent parts of a program to run on multiple machines at the same time, making the system even more scalable.

youngcrittersacademy@gmail.com

+1 408-768-1983

© 2024. All rights reserved.